VIVO 2016: Advanced Data Sharing & the Risks of ‘Garbage In, Gospel Out.’

As fall arrives, I’m looking back at some of the conferences and meetings that we attended over the summer. The VIVO annual conference was in Denver in August, and for us, it was both successful and enlightening. The conference centered on people interested in or already using VIVO and offered a collaborative atmosphere to talk about adopting and implementing VIVO and the opportunities created by advancing data sharing.

Our Co-Founder and President, Andrea Michalek, joined Bruce Herbert, Director of Scholarly Communications at Texas A&M, and Laurel Haak, Executive Director of ORCID, for the panel presentation, “Using VIVO and Other Tools to Achieve a Strategic Vision.”

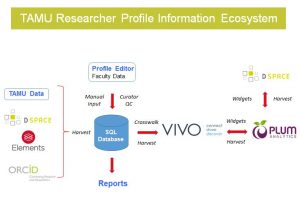

The panel showed the interconnections between VIVO, PlumX, ORCID and other parts of the Texas A&M research ecosystem.

See the entire slide presentation here.

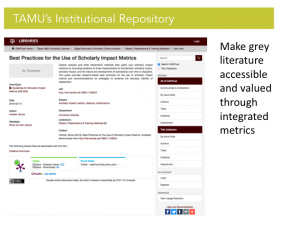

Among the many points about metrics at Texas A&M that Dr. Herbert made, one that particularly resonated was about making grey literature accessible and valuable through the use of metrics. This segment of the publishing universe can be otherwise under the radar. Altmetrics can bring it forward and highlight its utility.

From the many chats in our booth, we discovered that participants had an eager interest in altmetrics, and these chats spawned a number of thoughtful discussions on metrics and metrics design.

One of our booth visitors started an interesting discussion about avoiding the mistakes of the past. The chat segued into the hazards of a single-score approach to altmetrics: we all need to be mindful of using the right approach to evaluating research, or we will all suffer the consequences.

This conversation was summed up well in one of our favorite tweets of the conference, from Dario Taraborelli, head of research for the Wikimedia Foundation.

Garbage in, gospel out. You have a huge responsibility if you design metrics shaping how research is read, cited, funded #vivo16 #altmetrics

— Dario Taraborelli (@ReaderMeter) August 19, 2016

What are your thoughts on the metrics for evaluating research? What measures of interest make a difference to you? Tweet us at @PlumAnalytics.