What Your Citations Aren’t Telling You About Impact: Announcing Clinical Citations

From timeliness to self-citations, much has been written about why citation metrics are flawed — but that is not what this blog post is about. This blog post is about moving the conversation about citations forward to include clinical impact.

Clinical research does not get cited as much as basic science. Per O. Seglen recognized this as far back as 1997 in his article “Why the impact factor of journals should not be used for evaluating research,” in which he stated:

…clinical medicine draws heavily on basic science, but not vice versa. The result is that basic medicine is cited three to five times more than clinical medicine, and this is reflected in journal impact factors.

Why is this a problem?

- Researchers have difficulty getting funding.

- Early-career researchers opt out of translational medicine.

- Hospitals and research institutions cannot showcase their talents.

- Clinical journals can lack prestige.

It hurts us all when our researchers don’t want to turn basic science into ways to treat illness and disease, or when our doctors pioneer new treatments but have no motivation to publish them. Too often we wring our hands over the problems of citation analysis but collectively shrug our shoulders and decide there is nothing we can do about it.

We want to measure clinical impact. We want to help researchers, institutions and publishers understand what is impactful in the clinical realm.

Clinical Citations

We pioneered a new type of citation, a Clinical Citation, to understand the impact and tell the stories of clinical research. Clinical Citations are references to research in a variety of clinical sources. For example, these could include:

- Clinical Alerting Services

- Clinical Guidelines

- Systematic Reviews

- Clinical Trials

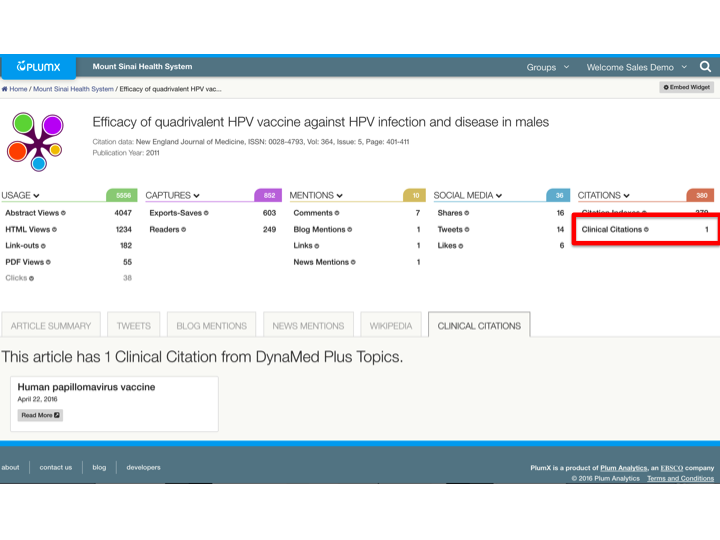

As the screenshot below shows, this research from Icahn School of Medicine at Mount Sinai has affected the clinical advice on the use of the human papillomavirus vaccine in males. This has been a highly debated topic, with the CDC recommendation for it coming as recently as December 2015.

This research got a lot of attention, as evidenced by its social media activity, but an important story that could otherwise be missed is its effect on clinical advice.

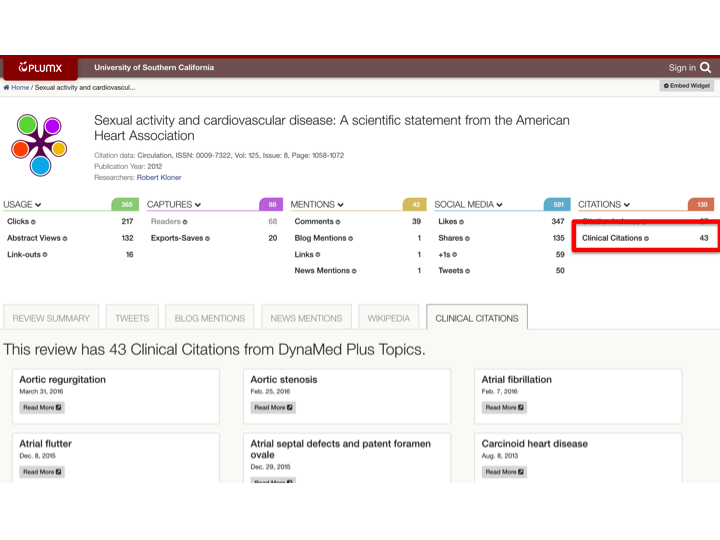

With Clinical Citations you can also start to see a picture emerge of how much your researchers are contributing to association guidelines or statements. For example, a researcher from the University of Southern California contributed to this American Heart Association (AHA) Scientific Statement on Sexual Activity and Cardiovascular Disease.

That this statement is referenced in 43 clinical topics is quantifiable evidence that USC researchers are influencing clinical information.

The PlumX Suite has started gathering these Clinical Citations from a clinical alerting service, DynaMed Plus®, whose editorial board of 500 physicians analyzes many sources, including 500 journals and 120 guideline organizations that make clinical recommendations.

Clinical Impact Across Groups

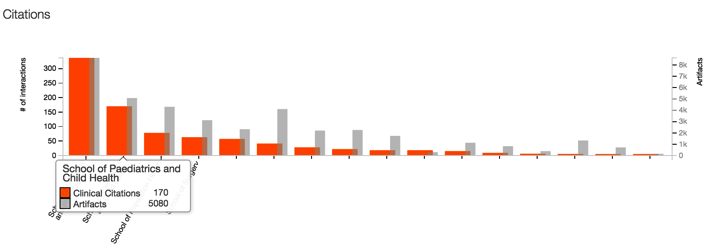

You can also use all of the analysis tools in the PlumX Suite with Clinical Citations. For example, in the graph below, this PlumX customer could compare its faculty departments to learn more about their clinical impact.

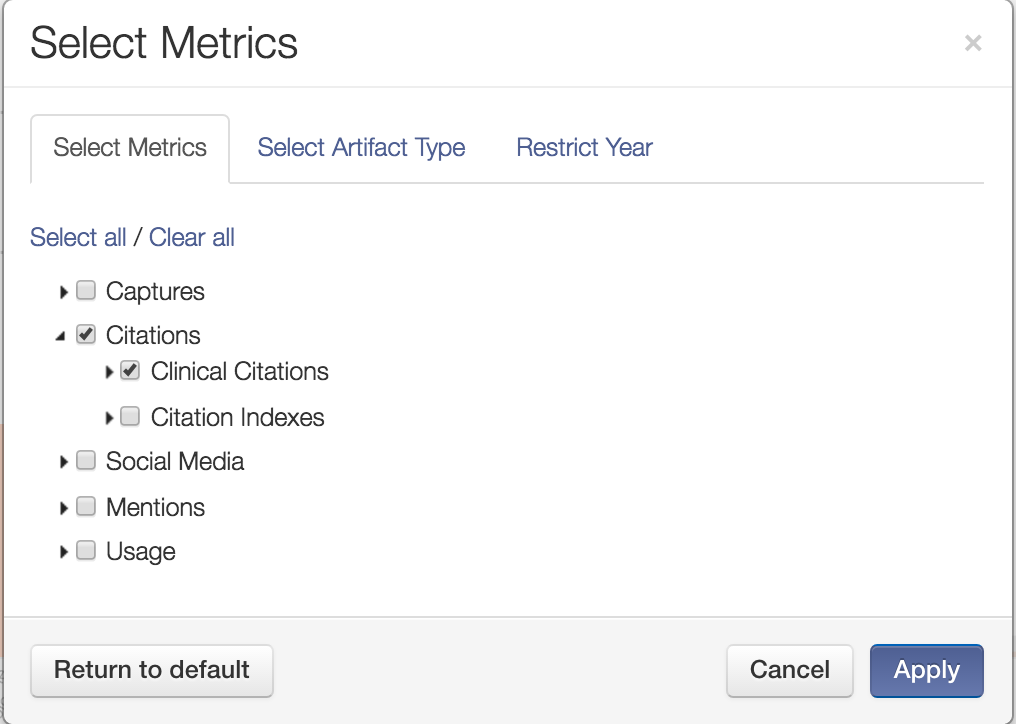

First, you can filter the analytics for just Clinical Citations.

Then, you can compare based on just Clinical Citations and start to see the overall picture of clinical impact in different areas emerge. (NOTE: The names of the specific departments have been blanked out to preserve anonymity.)

Clinical Citations via DynaMed Plus are an important step toward understanding clinical impact. They prove the concept and help our customers begin to see patterns of where their researchers are affecting clinical guidelines. We are working hard to expand and define this important new category of metrics.

References

Seglen, P. O. (1997). Why the impact factor of journals should not be used for evaluating research. BMJ, 314(7079), 497. doi:10.1136/bmj.314.7079.497