Wikipedia & Altmetrics: Calculating Mention Metrics

This is the second part of a two-part blog post about PlumX and Wikipedia mentions. In the first part, we explored the meaning and possible interpretations of Wikipedia mentions of scholarly output, both for an individual piece of research and in aggregate. In this second part, we discuss the alternative ways to calculate such a metric, and our approach at Plum Analytics.

What is the best way to calculate a mention metric?

The way that works for ALL of your research.

Before diving into the details of the different approaches for detecting and counting Wikipedia mentions of academic works, it’s important to discuss which works might get referenced on Wikipedia. It’s a broader range than one might encounter in peer-reviewed arenas. Wikipedia articles might refer and link to many different forms of research: peer-reviewed articles, theses, data sets, working papers, reports, or blog posts. If your altmetrics platform doesn’t track these kinds of research output, you’re likely not getting the full picture of your research’s reach.

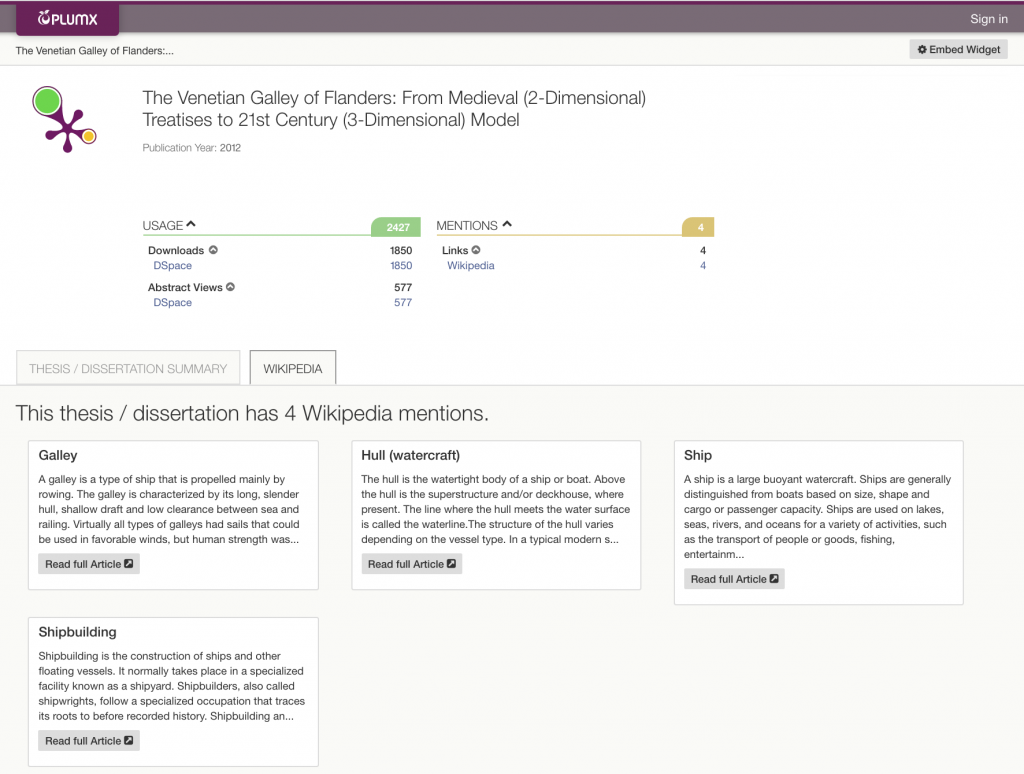

For example, let’s look at the following thesis. The author created a digital 3-D model of a 15th-century merchant galley ship using information from texts dating to that era. The visual animation of this model is included in such broad Wikipedia articles as those addressing the generic concepts of ‘Ships’ and ‘Shipbuilding’, gaining high visibility for a Master’s thesis in Anthropology.

Many altmetric tools would fail to track this thesis and any impact or attention around it at all, since it is published in only one place: Texas A&M University’s Open Access institutional repository (IR), an implementation of DSpace. The Wikipedia article links to the thesis in DSpace using the IR handle – which PlumX tracks for its customers.

This means that PlumX will find Wikipedia mentions that other platforms don’t, particularly for research types that don’t get a lot of measurable metrics to analyze and report on in the first place. Examples include theses like the one above, book chapters, books, and technical reports.

What is the best way to calculate a Wikipedia mention metric?

We also examined which approaches to finding Wikipedia references best balance two goals: finding as many references as possible, and keeping false positives to a minimum. Let’s consider three alternative strategies that have been proposed:

- Mining search engine results

- Watching Wikipedia pages for citation changes

- Mining the full text of all Wikipedia pages

At Plum, we found the third alternative to be the most complete while still scaling without too many false positives.

Alternative 1: Search Engine Results

In their 3:AM blog post on the subject, Mike Thelwall and Kayvan Kousha from the University of Wolverhampton discuss relying on phrase searching on search engines like Bing and Google. This depends heavily on the uniqueness of the work’s title, and on how an article is cited. For example, consider this 2010 review, “Conjunctivitis”, from the journal “Pediatrics in Review”. Because of the generic name of the work, a Google search of the title filtered to wikipedia.org returns over 3,000 results. Adding the authors’ names as they appear in the paper returns zero results. Through trial and error, one can find this reference using just the authors’ last names, because their first names are abbreviated in the Wikipedia page citation. Even then, this approach would fail to disambiguate between this review and any other paper these authors had written with the word “Conjunctivitis” in the title. The problem would be further compounded if both authors had common names that are harder to disambiguate.

This approach is also dependent on third parties’ search algorithms, which may change at any time, and on how often these third parties recrawl Wikipedia pages.

While this alternative might work for a small set of works with some manual query and result curation, an automated, reliable approach is necessary to find and disambiguate references for a large set of academic outputs.
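To make the trial and error above concrete, here is a minimal sketch of how the query variants might be generated. The function name `build_queries` and the author names are hypothetical placeholders, not taken from the review discussed above; the only search-engine feature assumed is the `site:` operator that Google and Bing both support.

```python
def build_queries(title, authors, site="wikipedia.org"):
    """Return phrase-search variants for one work.

    authors is a list of (first_name, last_name) tuples.
    """
    # Variant 1: exact title only, restricted to the target site.
    base = f'"{title}" site:{site}'
    # Variant 2: title plus full author names as printed on the paper.
    full_names = " ".join(f'"{first} {last}"' for first, last in authors)
    # Variant 3: title plus last names only, which still matches when
    # the Wikipedia citation abbreviates first names.
    last_names = " ".join(f'"{last}"' for _, last in authors)
    return [base, f"{base} {full_names}", f"{base} {last_names}"]


# Hypothetical authors, for illustration only.
queries = build_queries("Conjunctivitis", [("Jane", "Doe"), ("John", "Roe")])
for query in queries:
    print(query)
```

Even with all three variants, someone still has to decide which results really refer to the work, which is why this approach is hard to automate at scale.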

Alternative 2: Watching Wikipedia Page Citation Changes

Wikipedia’s platform provides features for users to insert citations inline into the text of the Wikipedia article. If these are properly entered and formatted, they will appear automatically in the References section at the bottom of the Wikipedia page. Proper formatting includes providing a URL, DOI or link to the referenced work.

Using the Venetian Galley Master’s thesis example referenced at the start of this post, you could use the approach in Alternative 1 to find references to this work: a Google search finds these references reliably. However, Alternative 2 would miss these references completely since the visualization is included as a link in a note, not a citation.
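A rough sketch of what watching for citation changes could look like, and why it misses links like the one described above. It assumes, as a simplification, that a “citation” is a `{{cite ...}}` template carrying a `doi`, `pmid` or `url` parameter; real wikitext has many more variants. The DOI and handle URL in the sample text are made up for illustration.

```python
import re

# Assumption: citations are {{cite ...}} templates without nested templates.
CITE_TEMPLATE = re.compile(r"\{\{\s*cite[^{}]*\}\}", re.IGNORECASE)
CITE_PARAM = re.compile(r"\|\s*(doi|pmid|url)\s*=\s*([^|}\s]+)", re.IGNORECASE)


def cited_identifiers(wikitext):
    """Collect (parameter, value) pairs found inside citation templates."""
    found = set()
    for template in CITE_TEMPLATE.findall(wikitext):
        for key, value in CITE_PARAM.findall(template):
            found.add((key.lower(), value))
    return found


def citation_changes(old_text, new_text):
    """Compare two revisions of a page and report citation differences."""
    old, new = cited_identifiers(old_text), cited_identifiers(new_text)
    return {"added": new - old, "removed": old - new}


# Two made-up revisions: the newer one adds a formatted citation and
# a bare external link that sits outside any citation template.
OLD = "Galleys were built in Venice."
NEW = (
    "Galleys were built in Venice."
    "<ref>{{cite journal |title=Example |doi=10.1234/example}}</ref> "
    "See the [http://hdl.handle.net/0000/example 3-D model]."
)

changes = citation_changes(OLD, NEW)
print(changes)
```

The formatted DOI citation is reported as added, but the bare handle link never shows up, because it is not part of a citation template.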

Alternative 3: Mining Wikipedia Page Full Text for Links and Identifiers

PlumX mines Wikipedia’s full text and references to find any links to the scholarly works we track, whether Wikipedia authors reference the work by DOI, PMID, or by URL. We track many different links to the same work – from the IR handle, to pre-print and open-access repository versions, to the many versions of a publisher URL – so that any references to any of those links will associate the Wikipedia page reference with your work.
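The idea of resolving many different links to one work can be illustrated with a small sketch. This is not PlumX’s actual implementation: the regular expressions, the `KNOWN_LINKS` index, the work IDs and every identifier in it are hypothetical, and the URL normalization (lowercasing, dropping the scheme and trailing punctuation) is a deliberate simplification.

```python
import re

# Simplified patterns; real-world DOIs and URLs need more careful handling.
DOI = re.compile(r"\b10\.\d{4,9}/[^\s|}\]<>\"]+")
PMID = re.compile(r"\|\s*pmid\s*=\s*(\d+)", re.IGNORECASE)
URL = re.compile(r"https?://[^\s|}\]<>\"]+")

# Hypothetical index: every tracked link or identifier maps to a work ID,
# so several links can point at the same work.
KNOWN_LINKS = {
    "doi:10.1234/example": "work-1",
    "url:hdl.handle.net/0000/example": "work-2",
    "url:repository.example.edu/handle/0000/example": "work-2",
}


def normalize_url(url):
    """Lowercase, drop the scheme and 'www.', trim trailing punctuation."""
    url = re.sub(r"^https?://(www\.)?", "", url.strip().lower())
    return url.rstrip("/.,;")


def find_mentions(wikitext):
    """Return the IDs of known works linked anywhere in the page text."""
    keys = {"doi:" + d.lower().rstrip(".,;") for d in DOI.findall(wikitext)}
    keys |= {"pmid:" + p for p in PMID.findall(wikitext)}
    keys |= {"url:" + normalize_url(u) for u in URL.findall(wikitext)}
    return {KNOWN_LINKS[key] for key in keys if key in KNOWN_LINKS}


PAGE = (
    "Galleys were built in Venice."
    "<ref>{{cite journal |title=Example |doi=10.1234/example}}</ref> "
    "See the [http://hdl.handle.net/0000/example 3-D model]."
)
print(find_mentions(PAGE))
```

Because the whole page text is scanned, the bare handle link in the sample is matched just like the formatted DOI citation, and either repository URL would resolve to the same work.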

…but can we do better?

For references that don’t meet Wikipedia’s best practices for citations and don’t link to an identifier or URL for the original work, Alternative 1 can actually find results that are missed by Alternatives 2 and 3. Further work on mining references, and eventually full text, for possible research artifact titles and authors that can be resolved to known pieces of research can help to close the gap in finding all research references in Wikipedia, all the time.

Another aspect worth further investigation is when a reference was added to a Wikipedia page and how long it remained there. A citation added immediately after publication but quickly removed by another Wikipedia editor might show a distinctly different pattern of attention or influence than one that took longer to appear after publication but remains as a citation for months or years.
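As a starting point for that kind of investigation, here is a minimal sketch of how citation timing could be measured from a page’s revision history. It assumes the revisions are already available as `(timestamp, wikitext)` pairs sorted oldest first, and it uses a plain substring check to decide whether the identifier is present. The function name, the field names and the sample data are all hypothetical.

```python
from datetime import datetime


def citation_lifetimes(revisions, identifier, published, now):
    """Summarize when an identifier appeared on a page and for how long.

    revisions: list of (timestamp, wikitext) tuples, oldest first.
    Returns None if the identifier never appears.
    """
    spans = []
    start = None
    for timestamp, text in revisions:
        present = identifier in text
        if present and start is None:
            start = timestamp  # citation appears
        elif not present and start is not None:
            spans.append((start, timestamp))  # citation removed
            start = None
    if start is not None:
        spans.append((start, now))  # still cited in the latest revision
    if not spans:
        return None
    return {
        "lag_days": (spans[0][0] - published).days,
        "days_cited": sum((end - begin).days for begin, end in spans),
        "still_cited": start is not None,
    }


# Made-up history: cited soon after publication, removed two days later.
history = [
    (datetime(2010, 1, 5), "No references yet."),
    (datetime(2010, 1, 10), "Text {{cite journal |doi=10.1234/example}}"),
    (datetime(2010, 1, 12), "Reference removed by another editor."),
]
short_lived = citation_lifetimes(
    history, "10.1234/example", datetime(2010, 1, 1), datetime(2010, 2, 1)
)
print(short_lived)
```

The lag between publication and first citation, and the total time the citation survived, are the two quantities that would separate the quick-removal pattern from the slow-but-lasting one described above.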